What exactly is AI, and how does it differ from machine learning? And how important, helpful, but also dangerous is it?

Artificial intelligence (AI) is an umbrella term for the development of computers or systems that can perform tasks that normally require human intelligence. The goal of AI is to enable machines to learn, think and solve problems much like humans do. Machine learning is a subfield of AI that focuses on algorithms and techniques that allow machines to learn from data without being explicitly programmed. It is based on the concept of training models on sample data to make predictions or decisions. AI and machine learning have the potential to be helpful in many areas. They can be used in automating tasks, analysing big data, personalised medicine, autonomous driving and much more. However, the use of AI also poses potential dangers. These include ethical issues, privacy concerns, potential job losses and the possibility of unforeseen behaviour or errors in AI systems. It is important that we continue to develop AI and machine learning responsibly and carefully evaluate the impact on different aspects of life. Regulation, ethical guidance and responsible use are crucial to unleash the full potential of these technologies and minimise their negative impact.
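To make "learning from data without being explicitly programmed" more concrete, here is a minimal sketch in Python. It is a toy illustration, not how production systems are built: the data (number of links per email, with a spam label) and the function names `train_threshold` and `predict` are invented for this example. Instead of hard-coding a rule, the program derives its decision threshold from labelled samples.

```python
# Toy illustration: derive a decision rule from labelled sample data
# instead of hard-coding it. Data and names are hypothetical.

def train_threshold(samples):
    """Return the midpoint between the two class means.

    samples: list of (value, label) pairs, label 0 (not spam) or 1 (spam).
    Assumes class 1 tends to have the larger values.
    """
    zeros = [value for value, label in samples if label == 0]
    ones = [value for value, label in samples if label == 1]
    mean0 = sum(zeros) / len(zeros)
    mean1 = sum(ones) / len(ones)
    return (mean0 + mean1) / 2


def predict(threshold, value):
    """Classify a new value using the learned threshold."""
    return 1 if value > threshold else 0


# (number of links in the email, is_spam)
training_data = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]

threshold = train_threshold(training_data)
print(threshold)                # 5.0
print(predict(threshold, 6.5))  # 1
print(predict(threshold, 2.5))  # 0
```

If the training data changes, the threshold changes with it, and no one has to rewrite the rule by hand. That is the basic idea behind training a model, even though real models have far more than one parameter.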

What examples of AI use already exist in everyday life, including ones that most people are not even aware of?

AI is already used in many areas of everyday life, some of which most people may not be aware of. Here are some examples:

Voice assistants: Voice-controlled assistants such as Siri, Google Assistant and Amazon Alexa use AI algorithms to understand speech and answer user queries.

Personalised recommendations: Platforms like Netflix, Spotify and Amazon use AI to create personalised recommendations for movies, music and products based on user behaviour and preferences.

Online search: Search engines like Google use AI algorithms to generate search results based on relevance and user context.

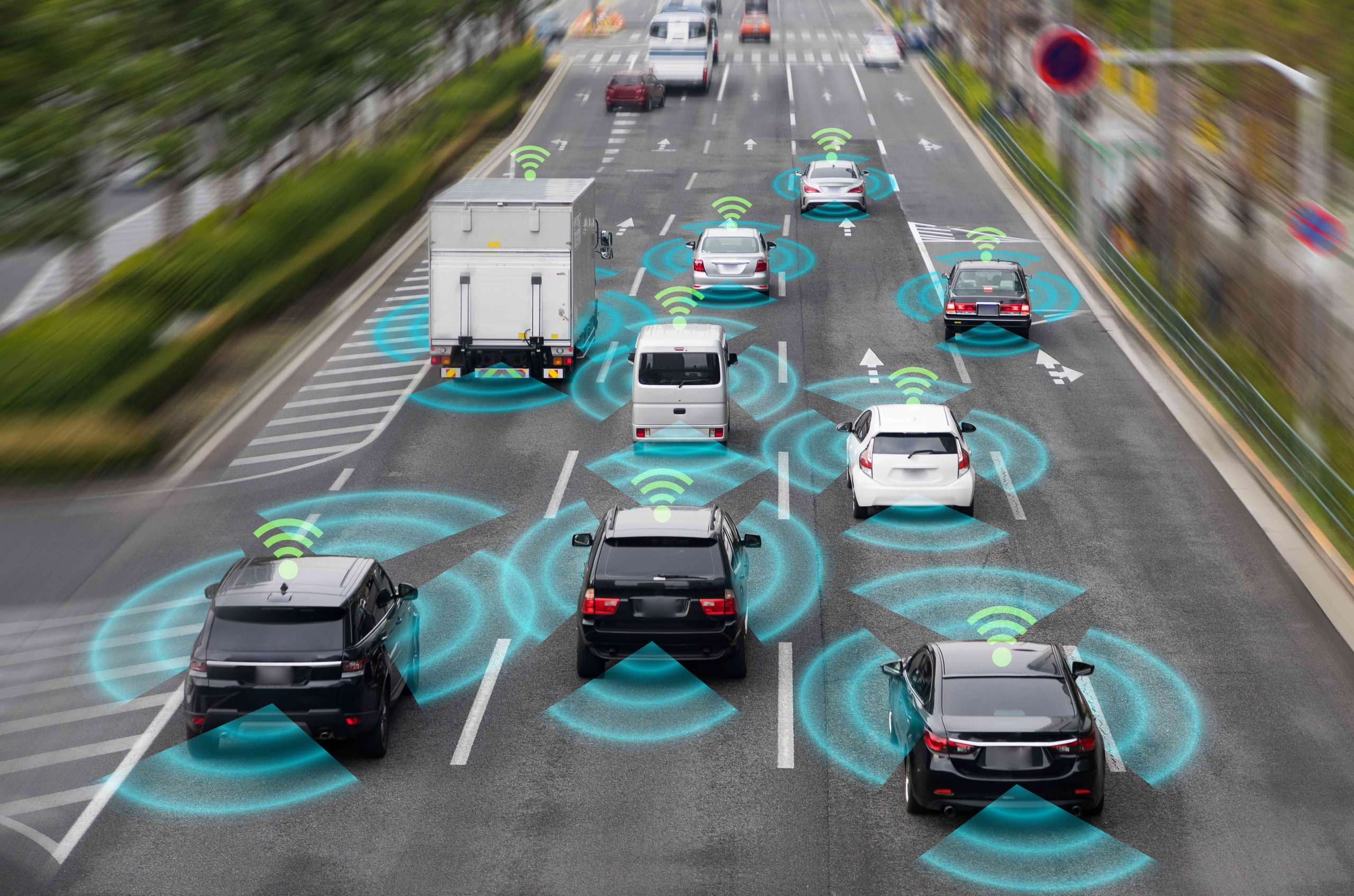

Image recognition: Social media platforms such as Facebook use AI to recognise faces in photos and tag people. Automatic image recognition is also used in security surveillance systems and to detect objects around autonomous vehicles.

Spam filters: Email services use AI to filter out unwanted emails and detect spam.

Autonomous vehicles: AI plays a crucial role in the development of autonomous vehicles that can analyse sensor data, recognise traffic conditions and make decisions.

Medical diagnosis: AI is used to analyse medical images such as X-rays or MRI scans to help diagnose diseases.

Chatbots: Many websites use chatbots that can interact with visitors and provide automated customer support by answering questions or helping with website navigation.

These are just a few examples, and the use of AI is continuously spreading into different areas of daily life.

And does AI always tell the truth or should everything be verified?

AI does not always tell the truth, so it is important to question its results critically and verify them. AI systems make decisions or predictions based on the data they were trained on. If that data is incomplete, erroneous or biased, the results can be too. It is therefore advisable to validate the results of AI systems, especially for decisions with far-reaching implications. Careful monitoring, assessment of accuracy and human expertise remain important to ensure the quality and reliability of AI results. AI systems can also raise ethical issues: algorithms trained on biased data can reinforce those biases. Examining results critically and incorporating ethical guidelines is therefore also important to avoid potential injustices.
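One simple way to picture "assessment of accuracy" is to compare a system's predictions with answers that humans have verified, both overall and per group. The sketch below is only an illustration under made-up assumptions: the records, the group labels "A" and "B", and the function names are hypothetical. It shows how a system can look accurate on average while performing much worse for an under-represented group.

```python
from collections import defaultdict


def overall_accuracy(records):
    """Share of records where the prediction matches the verified answer."""
    hits = sum(1 for _, predicted, actual in records if predicted == actual)
    return hits / len(records)


def accuracy_by_group(records):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical records: (group, predicted label, human-verified label)
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 0, 1), ("B", 0, 0),
]

print(overall_accuracy(records))   # 0.9
print(accuracy_by_group(records))  # {'A': 1.0, 'B': 0.5}
```

The overall figure of 90% hides that predictions for group B are right only half the time, which is why checking results per group, and not just in aggregate, can help reveal bias.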

What do you think of the Moral Machine?

The Moral Machine is a platform developed by scientists to stimulate discussions about ethical dilemmas in the context of autonomous vehicles. On the website, users are presented with hypothetical scenarios in which an autonomous vehicle is faced with moral decisions, such as choosing between protecting passengers or pedestrians in a dangerous situation. The Moral Machine has contributed to the debate on the ethical aspects of autonomous vehicles and raised awareness of the difficult decisions that need to be made in such situations. However, there are also criticisms of the platform. Some argue that the scenarios presented are simplified and unrealistic, and that the complexity and multi-layered nature of real moral decisions are not adequately represented. Anyone can try out the Moral Machine test for themselves at https://www.moralmachine.net/hl/ . Ultimately, the question of programming ethical principles into autonomous vehicles remains a challenge. It requires extensive discussion and involvement of various stakeholders, including ethicists, technologists, legislators and society at large, to find appropriate solutions that meet society’s values and needs.

Note: this “interview” was conducted with ChatGPT. The questions above were the only prompts given to the bot, which generates its answers using artificial intelligence.